Chaining API Tests to Handle Complex Distributed System Testing

Learn how to create tests to validate complex user processes that require multiple API endpoints to be called in a particular sequence.


Distributed system testing is complicated.

In this blog post, you’ll learn how to create tests to validate complex user processes that require multiple API endpoints to be called in a particular sequence.

With traditional testing, you need to configure multiple dependencies and manually create scripts to "glue" these API calls into a test suite.

With [Tracetest](https://tracetest.io/) you can build integration tests that assert against your distributed traces. [Tracetest Transactions](https://docs.tracetest.io/concepts/transactions/) let you chain multiple tests into a single transaction that validates a complex user process using observability traces.

I implemented these [transactions in the Tracetest repo here](https://github.com/kubeshop/tracetest/tree/main/tracetesting) so you can use a real example for your own implementation!

## Multistep Tests are Hard to Build

To verify the outcome of a complex user process through a backend test, you need to run multiple API calls in sequence. This is complex to set up because you need to configure dependencies for each API in the sequence. These tests end up being expensive in terms of time and resources.

Imagine you are developing a Web Store and you need to test the process of a user buying products. To complete the entire process, the user chooses a product in the store and adds it to a shopping cart, perhaps multiple times with different products. The user then goes to a checkout screen and adds payment info to complete the purchase.

To simulate this process, you need to combine two use cases:

- Add a product to a shopping cart:

```text
Use case: Add product to shopping cart.
As a consumer
I want to choose a product from the catalog
And add it to my shopping cart
So I can continue to explore the catalog to fulfill my shopping list.
```

- Complete the checkout:

```text
Use case: Checkout order.
As a consumer, after choosing products
I want to pay for all products in my shopping cart
So I can ship them to my address and use them.
```

Initially, it seems simple: create a test that calls the API implementing the first use case, then the API implementing the second use case, and check that the outcome is correct. The outcome you want is a paid order with the correct products and the shipping data filled in, like this:

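```python
# add_product_to_cart_api, checkout_order_api, the setup_* functions and the
# has_/is_ checks are placeholders for each API's client and verification helpers.

def user_purchase(user_data, product_id):
    # Use case 1: add the product to a shopping cart.
    cart_id = add_product_to_cart_api(user_data, product_id)
    # Use case 2: check out that cart; returns the resulting order ID.
    return checkout_order_api(user_data, cart_id)


def test_user_purchase(user_data):
    # Both APIs and their dependencies must be running before the test starts.
    setup_cart_api()
    setup_checkout_api()

    product_id = 1
    order_id = user_purchase(user_data, product_id)

    assert has_product_on_order(order_id, product_id)
    assert is_order_paid(order_id)
    assert is_shipping_data_filled(order_id, user_data)
```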

However, as you are building the test, you discover two problems:

- Each API is written in a different language.

- The APIs have 7 dependencies to set up.

Since you have access to the source code of these services, there are two options available for your tests:

1. Mock the API calls, assume that the APIs reply with the expected responses, and test only whether the user purchase process chains both use cases correctly;

2. Containerize both APIs (with [Docker Compose](https://docs.docker.com/compose/) or even inside the code with a [test container](https://www.testcontainers.org/) lib) and set up the data and dependencies for each API.

The first approach confirms that your process works at a high level and is faster to build and maintain, but because of the mocks, the test must be updated to reflect any change made to either API. The second approach improves on this by actually testing the APIs together, which makes the test more reliable, but at the cost of knowing or learning some of each API's internals and spending more time setting them up.
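
A minimal sketch of the first option, using Python's standard `unittest.mock`. To make the APIs easy to swap for mocks, it assumes (hypothetically) that `user_purchase` receives the two API clients as parameters; the method names `add_product` and `checkout` and the returned IDs are illustrative, not real client APIs.

```python
from unittest.mock import Mock


def user_purchase(user_data, product_id, cart_api, checkout_api):
    """Chain the two use cases: add a product to a cart, then check out that cart."""
    cart_id = cart_api.add_product(user_data, product_id)
    return checkout_api.checkout(user_data, cart_id)


def test_user_purchase_chains_both_apis():
    user_data = {"user_id": "2491f868-88f1-4345-8836-d5d8511a9f83"}

    # Option 1: assume each API replies with the expected response.
    cart_api = Mock()
    cart_api.add_product.return_value = "cart-123"  # hypothetical cart ID
    checkout_api = Mock()
    checkout_api.checkout.return_value = "order-456"  # hypothetical order ID

    order_id = user_purchase(user_data, "OLJCESPC7Z", cart_api, checkout_api)

    # Only the chaining is verified: the cart ID from step 1 feeds step 2.
    cart_api.add_product.assert_called_once_with(user_data, "OLJCESPC7Z")
    checkout_api.checkout.assert_called_once_with(user_data, "cart-123")
    assert order_id == "order-456"


if __name__ == "__main__":
    test_user_purchase_chains_both_apis()
    print("ok")
```

Note what this test cannot catch: if the real Checkout API changes its response, the mocks keep returning `"order-456"` and the test still passes, which is the maintenance cost of the first approach.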

It is a difficult trade-off, where either option has its advantages and disadvantages. You want to run these tests as fast and effectively as possible. So you wonder: *"Is there an easier way to do these tests?"*

After examining the options, you discover that your API that handles the user process and the Cart and Checkout APIs have one thing in common: observability is enabled, so you can observe the entire process through tracing tools like Jaeger. What if you test these integrations by tapping into this infrastructure?

## Testing Using your Observability Infrastructure

With an observability infrastructure gathering information about a set of APIs or microservices, we get a concise view of how these services operate and can start thinking in an [observability-driven way to test your software](https://tracetest.io/blog/the-difference-between-tdd-and-odd).

[Tracetest](https://tracetest.io/) can help. Given an API endpoint, Tracetest triggers it and then checks the resulting observability trace to see whether the API is behaving as intended.

For example, let’s test the [OpenTelemetry Astronomy Store](https://github.com/open-telemetry/opentelemetry-demo) demo, which has exactly the use cases we want to check.

To test the **"Add product to the shopping cart"** task, we can [create a test](https://docs.tracetest.io/web-ui/creating-tests), define in the **trigger** section the URL and payload to send to the Cart API, and use the **specs** section to define our assertions, checking whether the API was called with the correct Product ID and whether this product was persisted correctly.

```yaml
type: Test
spec:
  id: RPQ-oko4g
  name: Add product to the cart
  description: Add a selected product to user shopping cart
  trigger:
    type: http
    httpRequest:
      url: http://otel-store-demo-frontend:8080/api/cart
      method: POST
      headers:
      - key: Content-Type
        value: application/json
      body: '{"item":{"productId":"OLJCESPC7Z","quantity":1},"userId":"2491f868-88f1-4345-8836-d5d8511a9f83"}'
  specs:
  - selector: span[tracetest.span.type="http" name="hipstershop.CartService/AddItem"]
    # checking if the correct ProductID was sent
    assertions:
    - attr:app.product.id = "OLJCESPC7Z"
  - selector: span[tracetest.span.type="database" name="HMSET" db.system="redis" db.redis.database_index="0"]
    # checking if the product was persisted correctly on the shopping cart
    assertions:
    - attr:tracetest.selected_spans.count >= 1
```
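
To make the selector and assertion semantics concrete, here is a deliberately simplified Python model of how a spec could be evaluated against a trace: a selector keeps the spans whose name and attributes match, then each assertion is checked against the selected spans. It is not Tracetest's evaluation engine; the `Span` class, the function names, and the sample span data are hypothetical.

```python
import operator
from dataclasses import dataclass, field

# Comparison operators used by the assertions in the test above.
OPS = {"=": operator.eq, ">=": operator.ge}


@dataclass
class Span:
    name: str
    attributes: dict = field(default_factory=dict)


def select_spans(spans, criteria):
    """Keep spans whose name and attributes equal every value in `criteria`."""
    selected = []
    for span in spans:
        values = {"name": span.name, **span.attributes}
        if all(values.get(key) == expected for key, expected in criteria.items()):
            selected.append(span)
    return selected


def check_spec(spans, criteria, assertions):
    """Return True if every (attribute, operator, expected) assertion holds."""
    selected = select_spans(spans, criteria)
    for attr, op, expected in assertions:
        compare = OPS[op]
        if attr == "tracetest.selected_spans.count":
            # Meta-attribute: how many spans the selector matched.
            if not compare(len(selected), expected):
                return False
        elif not selected or not all(
            span.attributes.get(attr) is not None
            and compare(span.attributes[attr], expected)
            for span in selected
        ):
            # Regular attribute: it must hold on every selected span.
            return False
    return True


# Made-up spans shaped like the ones the "Add product to the cart" test selects.
trace = [
    Span("hipstershop.CartService/AddItem",
         {"tracetest.span.type": "http", "app.product.id": "OLJCESPC7Z"}),
    Span("HMSET",
         {"tracetest.span.type": "database", "db.system": "redis",
          "db.redis.database_index": "0"}),
]

product_sent = check_spec(
    trace,
    {"tracetest.span.type": "http", "name": "hipstershop.CartService/AddItem"},
    [("app.product.id", "=", "OLJCESPC7Z")],
)
product_persisted = check_spec(
    trace,
    {"tracetest.span.type": "database", "name": "HMSET", "db.system": "redis"},
    [("tracetest.selected_spans.count", ">=", 1)],
)
print(product_sent, product_persisted)  # True True
```

The point of the model is the two-stage shape: selectors narrow the trace down to the spans you care about, and assertions then describe what must be true of them.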

We can run this test in Tracetest’s Web UI or with the CLI, with this command:

```bash
tracetest test run -d ./path-of-test.yml --wait-for-result
```

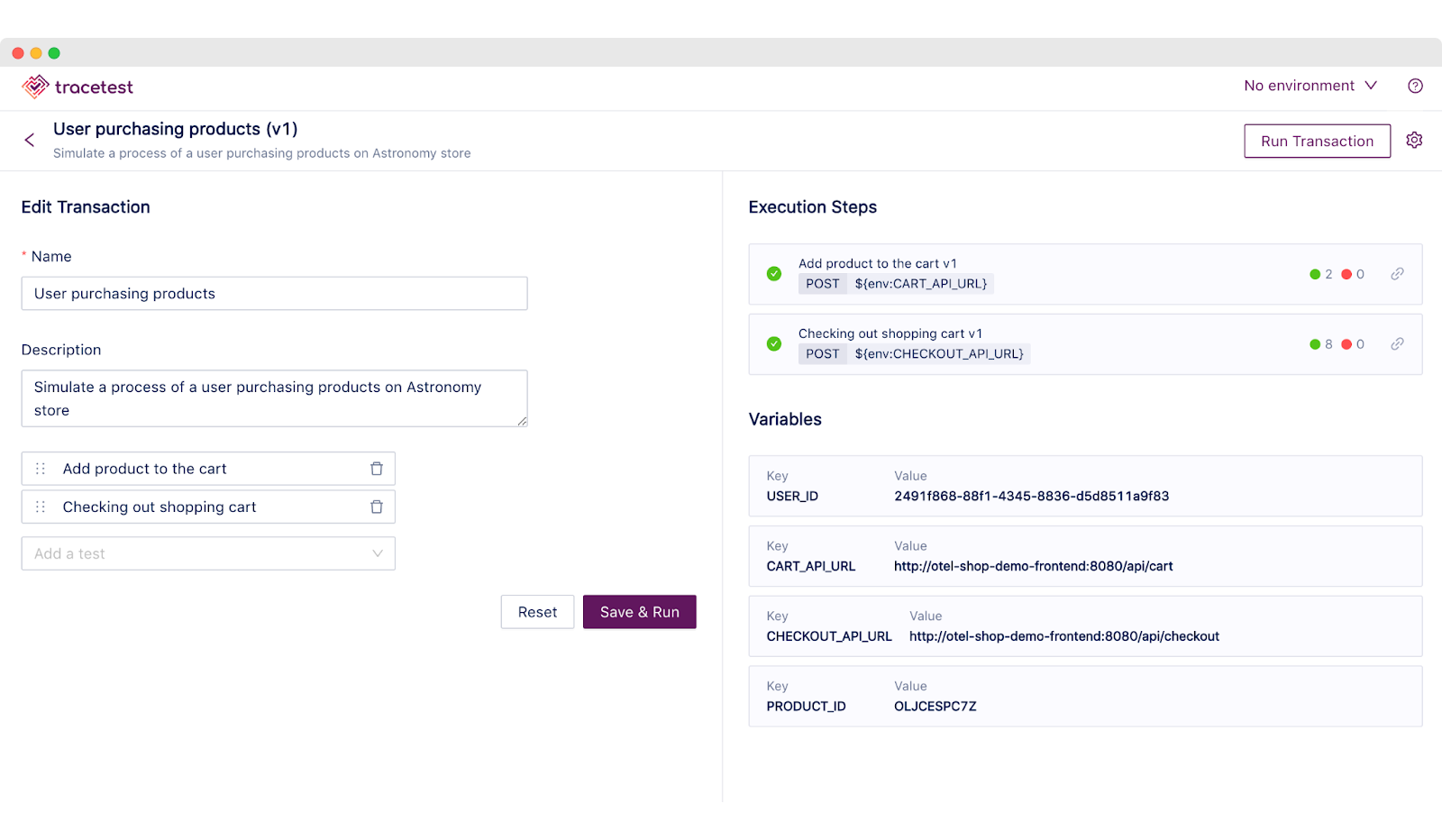

The result will look like this:

To test checking out the shopping cart, create a similar test for the Checkout API:

```yaml
type: Test
spec:
  id: PG1i9nT4g
  name: Checking out shopping cart
  description: Checking out shopping cart
  trigger:
    type: http
    httpRequest:
      url: http://otel-store-demo-frontend:8080/api/checkout
      method: POST
      headers:
      - key: Content-Type
        value: application/json
      body: '{"userId":"2491f868-88f1-4345-8836-d5d8511a9f83","email":"someone@example.com","address":{"streetAddress":"1600 Amphitheatre Parkway","state":"CA","country":"United States","city":"Mountain View","zipCode":"94043"},"userCurrency":"USD","creditCard":{"creditCardCvv":672,"creditCardExpirationMonth":1,"creditCardExpirationYear":2030,"creditCardNumber":"4432-8015-6152-0454"}}'
  specs:
  - selector: span[tracetest.span.type="rpc" name="hipstershop.CheckoutService/PlaceOrder" rpc.system="grpc" rpc.method="PlaceOrder" rpc.service="hipstershop.CheckoutService"]
    assertions: # checking if an order was placed
    - attr:app.user.id = "2491f868-88f1-4345-8836-d5d8511a9f83"
    - attr:app.order.items.count = 1
  - selector: span[tracetest.span.type="rpc" name="hipstershop.PaymentService/Charge" rpc.system="grpc" rpc.method="Charge" rpc.service="hipstershop.PaymentService"]
    assertions: # checking if the user was charged
    - attr:rpc.grpc.status_code = 0
    - attr:tracetest.selected_spans.count >= 1
  - selector: span[tracetest.span.type="rpc" name="hipstershop.ShippingService/ShipOrder" rpc.system="grpc" rpc.method="ShipOrder" rpc.service="hipstershop.ShippingService"]
    assertions: # checking if the product was shipped
    - attr:rpc.grpc.status_code = 0
    - attr:tracetest.selected_spans.count >= 1
  - selector: span[tracetest.span.type="rpc" name="hipstershop.CartService/EmptyCart" rpc.system="grpc" rpc.method="EmptyCart" rpc.service="hipstershop.CartService"]
    assertions: # checking if the cart was emptied
    - attr:rpc.grpc.status_code = 0
    - attr:tracetest.selected_spans.count >= 1
```
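
The request `body` in this test is a long single-line JSON string. As a readability aid, this Python sketch assembles the same JSON from its parts with the standard `json` module, which makes the structure visible: a user ID and email, a nested `address`, a currency, and a nested `creditCard`. The helper name `build_checkout_body` is hypothetical; the values are the demo values from the test.

```python
import json


def build_checkout_body(user_id, email, address, currency, credit_card):
    """Serialize the checkout request body as compact JSON (no extra spaces)."""
    payload = {
        "userId": user_id,
        "email": email,
        "address": address,
        "userCurrency": currency,
        "creditCard": credit_card,
    }
    return json.dumps(payload, separators=(",", ":"))


body = build_checkout_body(
    user_id="2491f868-88f1-4345-8836-d5d8511a9f83",
    email="someone@example.com",
    address={
        "streetAddress": "1600 Amphitheatre Parkway",
        "state": "CA",
        "country": "United States",
        "city": "Mountain View",
        "zipCode": "94043",
    },
    currency="USD",
    credit_card={
        "creditCardCvv": 672,
        "creditCardExpirationMonth": 1,
        "creditCardExpirationYear": 2030,
        "creditCardNumber": "4432-8015-6152-0454",
    },
)
print(body)
```

Passing `separators=(",", ":")` drops the spaces `json.dumps` adds by default, so the output matches the compact string used in the test definition.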

However, these tests are not chained into a transaction. That’s up next.

## Chaining Tests with Transactions

So far, you’ve only learned how to test each endpoint in a specific condition. Can you test the entire process of calling the Cart API and then the Checkout API in sequence, like in the Python code above?

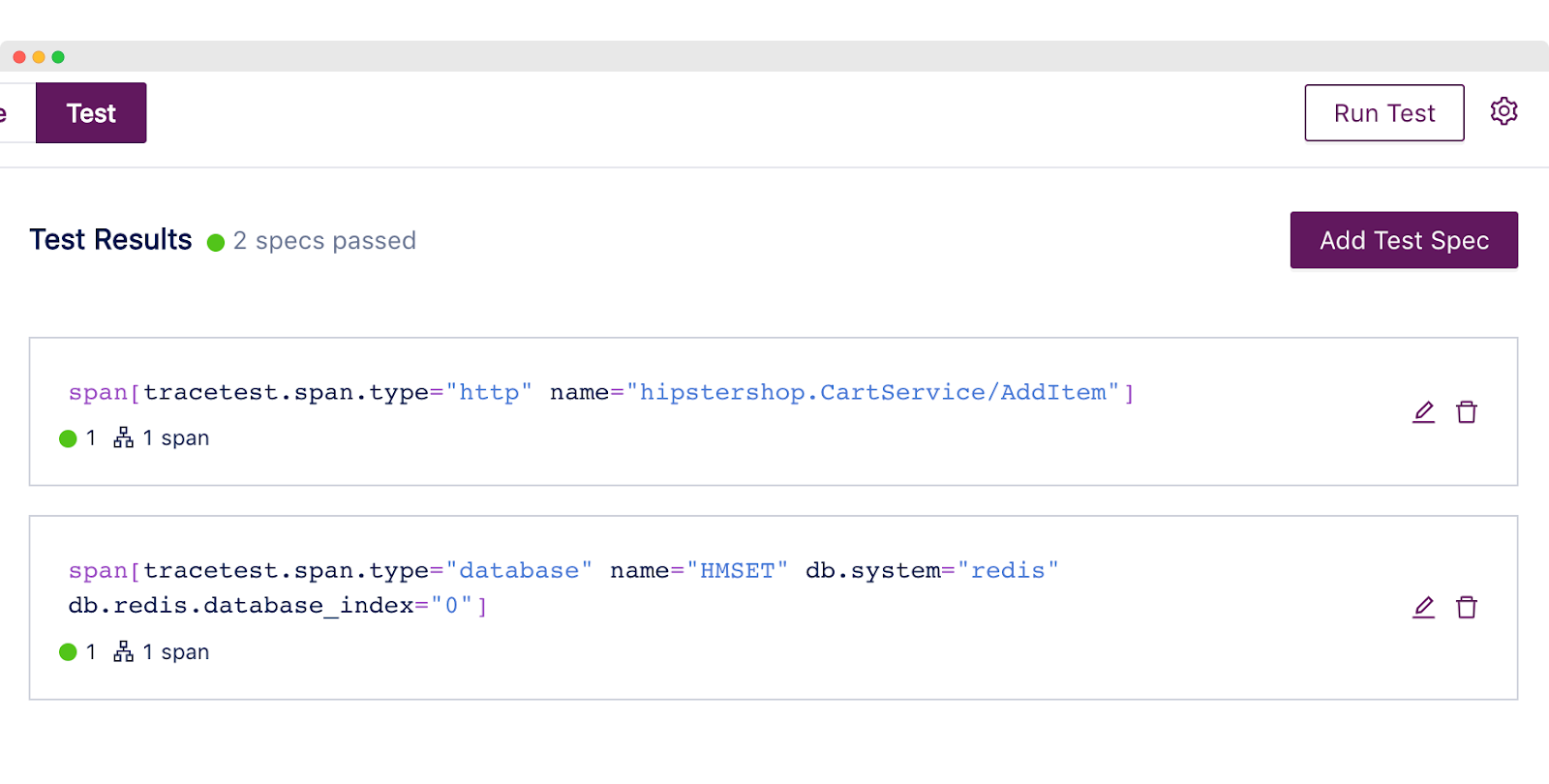

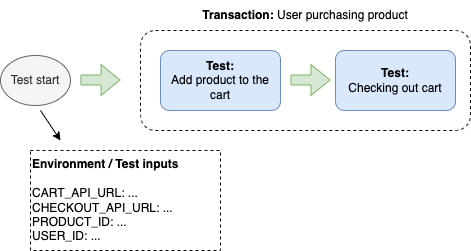

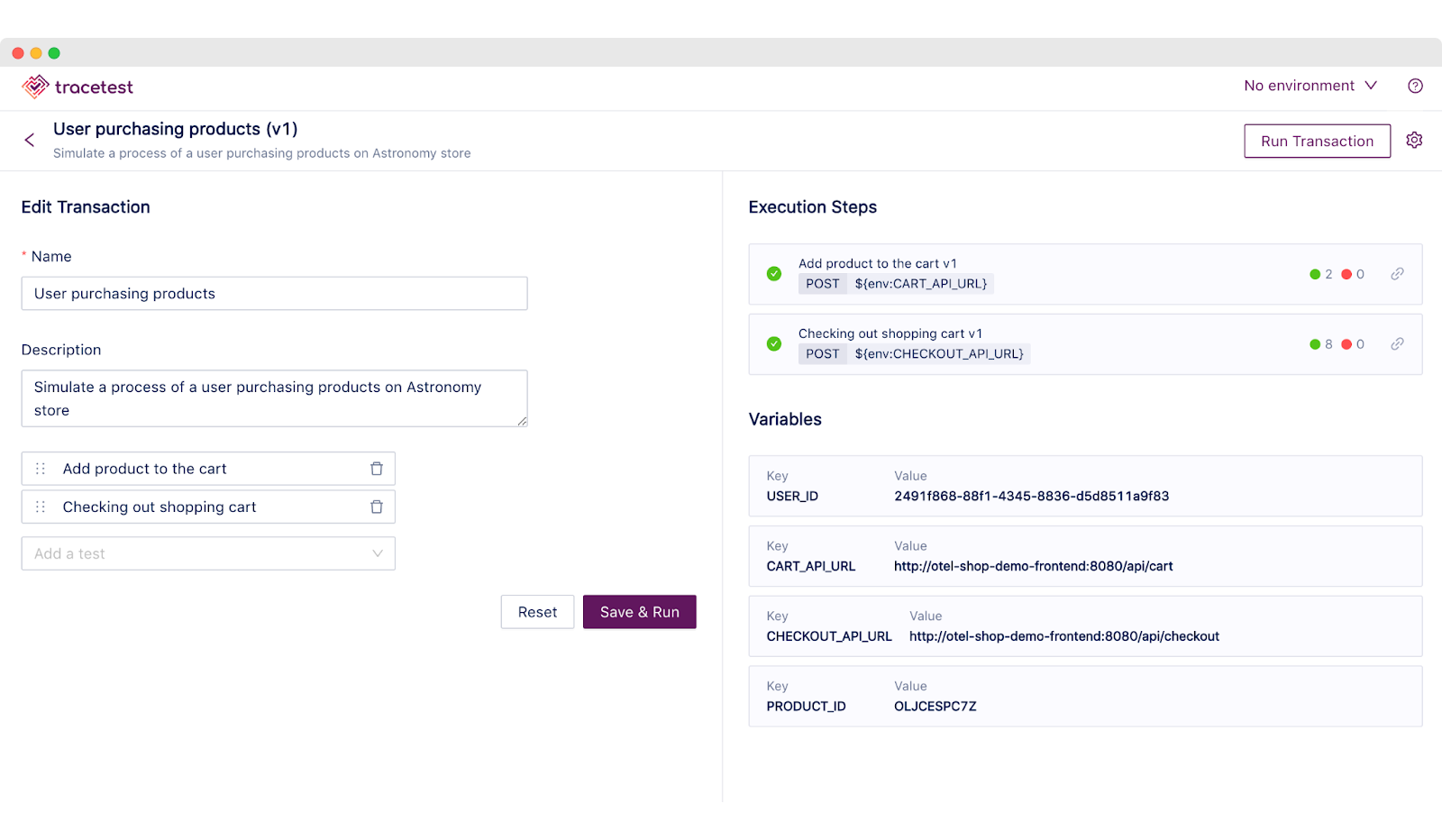

With Tracetest, the answer is **Yes**. You can combine two Tracetest features: [Transactions](https://docs.tracetest.io/concepts/transactions), which let you chain tests and run them as a single test suite, and [Environments](https://docs.tracetest.io/concepts/environments), which let you define variables and use them across tests. Together, they let you structure the test like the `test_user_purchase` function at the beginning of this article. Here’s how:

With a transaction, you can chain tests to run in sequence; the transaction is marked **“successful”** if all tests succeed and **“failed”** if any test in the sequence fails. In Tracetest, you can define the transaction like this:

```yaml
type: Transaction
spec:
  id: V72Ug4oVR
  name: User purchasing products
  description: Simulate a process of a user purchasing products on Astronomy store
  steps:
  - RPQ-oko4g # Test ID of "Add product to the cart"
  - PG1i9nT4g # Test ID of "Checking out shopping cart"
```
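
The pass/fail rule for a transaction can be modeled in a few lines of Python. This is a hypothetical sketch of the semantics, not Tracetest's runner: each step is a stand-in callable for one test, and stopping at the first failing step is a choice made in this sketch, not documented Tracetest behavior.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical step: takes the shared variables, returns True if the test passed.
Step = Callable[[Dict[str, str]], bool]


def run_transaction(steps: List[Tuple[str, Step]], variables: Dict[str, str]):
    """Run steps in order; the transaction succeeds only if every step passes."""
    results = []
    for test_id, step in steps:
        passed = step(variables)
        results.append((test_id, passed))
        if not passed:
            break  # this sketch stops early: later steps depend on earlier ones
    status = "successful" if all(passed for _, passed in results) else "failed"
    return status, results


# Stand-ins for the two tests; real steps would trigger the APIs and check traces.
steps = [
    ("RPQ-oko4g", lambda v: v["PRODUCT_ID"] == "OLJCESPC7Z"),
    ("PG1i9nT4g", lambda v: bool(v["USER_ID"])),
]
variables = {"PRODUCT_ID": "OLJCESPC7Z", "USER_ID": "2491f868-88f1-4345-8836-d5d8511a9f83"}
print(run_transaction(steps, variables))
```

With the variables above, both stand-in steps pass and the transaction is reported as `"successful"`; changing `PRODUCT_ID` makes the first step fail, and the whole transaction is reported as `"failed"`.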

After defining the transaction, you can refactor the tests to read their inputs from environment variables instead of hard-coded values, so the inputs can be changed from one test run to the next. The environment can be defined like this:

```yaml
type: Environment
spec:
  id: user-buying-products---env
  name: User buying Products - Env
  description: Environment for the process - "User buying products"
  values:
  - key: CART_API_URL
    value: http://otel-store-demo-frontend:8080/api/cart
  - key: CHECKOUT_API_URL
    value: http://otel-store-demo-frontend:8080/api/checkout
  - key: PRODUCT_ID
    value: OLJCESPC7Z
  - key: USER_ID
    value: 2491f868-88f1-4345-8836-d5d8511a9f83
```

Or, if you’re using the Tracetest CLI, you can set them in a [dotenv](https://github.com/motdotla/dotenv) file and pass it to the transaction run like this:

```bash
# contents in .env file
CART_API_URL=http://otel-store-demo-frontend:8080/api/cart
CHECKOUT_API_URL=http://otel-store-demo-frontend:8080/api/checkout
PRODUCT_ID=OLJCESPC7Z
USER_ID=2491f868-88f1-4345-8836-d5d8511a9f83

# execution on CLI
tracetest test run -e .env -d ./path-of-transaction.yml --wait-for-result
```
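
To see how these variables end up inside a test, here is a simplified Python model of both halves: reading a dotenv file into key/value pairs, then substituting them into a test definition. It assumes Tracetest's `${env:NAME}` placeholder syntax (check the Environments documentation for your version). `parse_dotenv` ignores quoting and other dotenv features, and both helper names and the refactored `trigger` are hypothetical.

```python
import re

# Matches placeholders such as ${env:CART_API_URL} (assumed Tracetest syntax).
PLACEHOLDER = re.compile(r"\$\{env:([A-Za-z_][A-Za-z0-9_]*)\}")


def parse_dotenv(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a KEY=VALUE line: {raw_line!r}")
        values[key.strip()] = value.strip()
    return values


def resolve(value, env):
    """Recursively replace ${env:NAME} placeholders; unknown names raise KeyError."""
    if isinstance(value, str):
        return PLACEHOLDER.sub(lambda match: env[match.group(1)], value)
    if isinstance(value, list):
        return [resolve(item, env) for item in value]
    if isinstance(value, dict):
        return {key: resolve(item, env) for key, item in value.items()}
    return value


env = parse_dotenv("""
# contents in .env file
CART_API_URL=http://otel-store-demo-frontend:8080/api/cart
PRODUCT_ID=OLJCESPC7Z
USER_ID=2491f868-88f1-4345-8836-d5d8511a9f83
""")

# Hypothetical trigger of the "Add product to the cart" test, refactored to use variables.
trigger = {
    "url": "${env:CART_API_URL}",
    "method": "POST",
    "body": '{"item":{"productId":"${env:PRODUCT_ID}","quantity":1},"userId":"${env:USER_ID}"}',
}
print(resolve(trigger, env)["url"])  # http://otel-store-demo-frontend:8080/api/cart
```

Because only the placeholders change, the same test definition can target a different host or product simply by swapping the `.env` file.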

At the end of the transaction run, we will have a view like this, showing how this **“Purchase process”** was structured and tested:

## Conclusion

By using your observability infrastructure, Tracetest allows you to build complex and deep system tests in a simpler way. It uses a declarative language that helps you create assertions against the behavior of an API more quickly.

With transactions, you can chain tests and check how APIs work together to determine if they are working as intended, providing valuable feedback to the teams that maintain those APIs.

Recently, at Tracetest we started using transactions to test our own engine, making a sequence of tests to guarantee that each feature is working effectively. You can see more details about our implementation of transactions [here](https://github.com/kubeshop/tracetest/tree/main/tracetesting).

Ready to build more complex chained tests against your microservice-based app? [Download and install Tracetest](https://tracetest.io/download) into either Docker or Kubernetes, select the trace data store you use to collect and store your distributed traces, and get started in just 5 minutes.

The Tracetest team is eager to hear from you - reach out to us in our [Slack channel](https://dub.sh/tracetest-community) or create a [GitHub issue](https://github.com/kubeshop/tracetest/issues/new/choose) for any additional capability you need. If you like what we are doing - please give us a star at [github.com/kubeshop/tracetest](https://github.com/kubeshop/tracetest/).
